Algorithmic explainability for transparent AI

As machine learning methods become more widespread, it is important to be able to explain and verify the algorithms’ results. In this regard, the GDPR, and regulation more broadly, call for audit trails on algorithms’ decision-making. Machine learning methods are also vulnerable to cyber attacks, a risk that must be mitigated.

All these concerns reflect a general drive towards greater transparency in artificial intelligence. Our mission is to provide key explainability methods to improve the transparency and interpretability of AI solutions.

Our vision

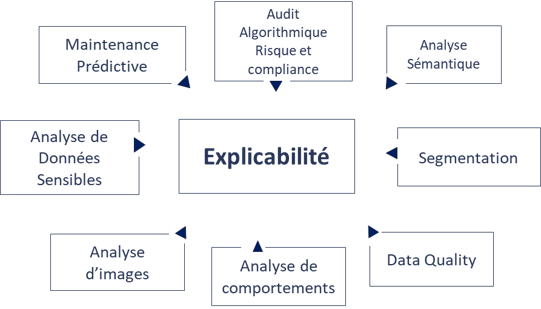

Algorithmic explainability is a cross-functional discipline whose complexity varies depending on the AI solution to which it is applied.

The goal of our value proposition is to integrate explainability into the standard development and deployment process of AI solutions. Doing so would encourage widespread adoption of AI, ensure a more accurate interpretation of results and lead to greater confidence in these technologies.

The various areas of application of algorithmic explainability:

Our strengths

A turnkey archive for explainability

Explainability is a new discipline – as such, some approaches are still being studied and tested. Our expertise has enabled us to assemble a package of solutions that can be easily deployed by companies’ AI teams.

High-level AI expertise

Our AI expertise, built through client projects and a range of AI solutions, allows us to put forward applicable explainability solutions of manageable complexity, with an integrated and scalable architecture.

An AI LAB for experimental research

HeadMind LAB’s mission is to test, audit, and challenge explainability approaches. Graphene offers companies the best approaches based on their context, AI solutions and IT infrastructure.

Contact our experts

Thank you for your interest in HeadMind Partners. Please fill in the form to send us your question.